Come Back Again With This Code

Understanding Async, Avoiding Deadlocks in C#

You ran into some deadlocks, you are trying to write async code the proper way, or maybe you're just curious. Somehow you ended up here, and you want to fix a deadlock or improve your code.

I'll try to keep this concise and practical, and for further reading check out the related articles. To write proper async C# code and avoid deadlocks you need to understand a few concepts.

Setting up good practices can help avoid common issues, but sometimes that's not enough, and that's when you need to understand what's happening below the abstraction layers.

You should already be familiar with async code. There are many articles that discuss how to use it, but not many explain how it works. If you're not familiar with it at all, I recommend at least reading something about it. Ideally you should already have some experience using async functions.

Tasks or Threads?

Tasks have nothing to do with Threads, and this is the cause of many misconceptions, especially if you have in your mind something like "well, a Task is like a lightweight Thread". A Task is not a thread. A Task does not guarantee parallel execution. A Task does not belong to a Thread or anything like that. They are two separate concepts and should be treated as such.

A Task represents some work that needs to be done. A Task may or may not be completed. The moment when it completes can be right now or in the future.

The equivalent in many other languages is the Promise. A Task can be completed just like a Promise can be fulfilled. A Task can be faulted just like a Promise can be rejected. This is the only thing that a Task does: it keeps track of whether some work has been completed or not.

If the Task is completed and not faulted, then the continuation task will be scheduled. Faulted state means that there was an exception. Tasks have an associated TaskScheduler which is used to schedule a continuation Task, or any other child Tasks that are required by the current Task.
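The Promise analogy can be made concrete with TaskCompletionSource<T>, which lets you complete or fault a Task by hand without running anything on a thread. This is only a minimal sketch; the class and method names (TaskStatesDemo, CompletedLater, Faulted) are made up for illustration.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskStatesDemo
{
    // Creates a Task that is only a record of pending work: it stays
    // incomplete until SetResult is called, like a Promise being fulfilled.
    public static TaskStatus[] CompletedLater()
    {
        var source = new TaskCompletionSource<int>();
        TaskStatus before = source.Task.Status; // not completed yet
        source.SetResult(42);                   // "fulfil the promise"
        TaskStatus after = source.Task.Status;  // completed successfully
        return new[] { before, after };
    }

    // Faulting a Task is the counterpart of rejecting a Promise.
    public static Task<int> Faulted()
    {
        var source = new TaskCompletionSource<int>();
        source.SetException(new InvalidOperationException("request failed"));
        return source.Task;
    }

    public static void Main()
    {
        TaskStatus[] states = CompletedLater();
        Console.WriteLine($"{states[0]} -> {states[1]}");
        Console.WriteLine($"IsFaulted: {Faulted().IsFaulted}");
    }
}
```

Notice that no thread is started anywhere in this sketch: the Task only records whether the work is done.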

Threads are a completely different story. Threads, just as in any OS, represent the execution of code. Threads keep track of what you execute and where you execute it. Threads have a call stack, store local variables, and hold the address of the currently executing instruction. In C# each thread can also have an associated SynchronizationContext, which is used to communicate between different types of threads.

C# uses Threads to run some code and mark some Tasks as being completed. For performance reasons there is usually more than one thread. So Threads execute Tasks… simple, you might think… but that's not the whole picture. The whole picture looks something like this:

Threads execute Tasks which are scheduled by a TaskScheduler.

What Does await Actually Do?

Let's start with an example. This is how you would properly implement an I/O bound operation. The application needs to request some data from a server. This does not use much CPU, so to use resources efficiently we use the async methods of HttpClient.

The proper async / await version:

```csharp
public async Task<String> DownloadStringV1(String url)
{
    // good code
    var request = await HttpClient.GetAsync(url);
    var download = await request.Content.ReadAsStringAsync();
    return download;
}
```

What the code example does should be obvious if you are at least a bit familiar with async/await. The request is done asynchronously and the thread is free to work on other tasks while the server responds. This is the ideal case.

But how does async/await manage to do it? It's nothing special, just a little bit of syntactic sugar over the following code. The same thing that async/await does can be achieved by using ContinueWith and Unwrap.

The following code example does the same thing, with minor differences.

ContinueWith / Unwrap version (this is still async):

```csharp
public Task<String> DownloadStringV2(String url)
{
    // okay code
    var request = HttpClient.GetAsync(url);
    var download = request.ContinueWith(http =>
        http.Result.Content.ReadAsStringAsync());
    return download.Unwrap();
}
```

Really, that's all async/await does! It schedules tasks for execution, and once a task is done another task is scheduled. It creates something like a chain of tasks.

Everything you do with async and await ends up in an execution queue. Each Task is queued up using a TaskScheduler, which can do anything it wants with your Task. This is where things get interesting: the TaskScheduler depends on the context you are currently in.
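To see the "chain of tasks" idea in isolation, here is a small sketch where the awaited Task is controlled by hand. The names (AwaitDemo, ShoutAsync) are hypothetical and not part of the original examples. The method returns to its caller at the first incomplete await, and the rest of the method runs later as a continuation.

```csharp
using System;
using System.Threading.Tasks;

public static class AwaitDemo
{
    // Awaits a Task that the caller controls, then transforms its result.
    public static async Task<string> ShoutAsync(Task<string> input)
    {
        string text = await input;      // returns to the caller here if input is incomplete
        return text.ToUpperInvariant(); // runs later, as the continuation
    }

    public static async Task Main()
    {
        var source = new TaskCompletionSource<string>();
        Task<string> pending = ShoutAsync(source.Task);

        // ShoutAsync has already returned, but its Task is not completed yet.
        Console.WriteLine($"completed before result: {pending.IsCompleted}");

        source.SetResult("hello");      // completing the input schedules the continuation
        Console.WriteLine(await pending);
    }
}
```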

Code that might work in some contexts…

Let's look at the same DownloadString function, but this time it's implemented in a bad way. This might still work in some cases.

This type of code should be avoided, and it should never be used in libraries that can be called from different contexts.

The following example is a sync version which achieves the same thing, but in a very, very different way. It blocks the thread. We're getting into dangerous territory. It's radically different from the code above and should never be considered an equivalent implementation.

Sync version, blocks the thread, not safe:

```csharp
public String DownloadStringV3(String url)
{
    // NOT SAFE, instant deadlock when called from UI thread
    // deadlock when called from threadpool, works fine on console
    var request = HttpClient.GetAsync(url).Result;
    var download = request.Content.ReadAsStringAsync().Result;
    return download;
}
```

The code above will also download the string, but it will block the calling Thread while doing so, and if that thread is a threadpool thread, then it will lead to a deadlock if the workload is high enough. Let's see what it does in more detail:

- Calling HttpClient.GetAsync(url) will create the request. It might run some part of it synchronously, but at some point it reaches the part where it needs to offload the work to the networking API of the OS.

- This is where it will create a Task and return it in an incomplete state, so that you can schedule a continuation.

- But instead you read the Result property, which blocks the thread until the task completes. This just defeated the whole purpose of async: the thread can no longer work on other tasks, it's blocked until the request finishes.

The problem is that if you block the threads which are supposed to work on the Tasks, then there won't be a thread left to complete a Task.

This depends on context, so it's important to avoid writing this type of code in a library where you have no control over the execution context.

- If you are calling from the UI thread, you will deadlock instantly, as the task is queued for the UI thread, which gets blocked when it reaches the Result property.

- If called from a threadpool thread, then a threadpool thread is blocked, which will lead to a deadlock if the workload is high enough. If all threads in the threadpool are blocked, then there will be nobody to complete the Task.

- But this example will work if you're calling from a main or dedicated thread (one which does not belong to the threadpool and does not have a synchronization context).

Let's look at an example which is just as bad, but can work fine in other cases.

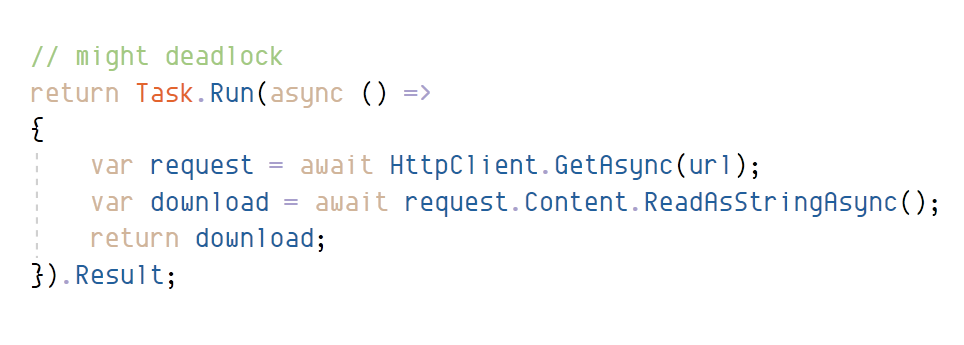

Sync version, defeats the purpose, blocks the calling thread and definitely not safe:

```csharp
public String DownloadStringV4(String url)
{
    // NOT SAFE, deadlock when called from threadpool
    // works fine on UI thread or console main
    return Task.Run(async () => {
        var request = await HttpClient.GetAsync(url);
        var download = await request.Content.ReadAsStringAsync();
        return download;
    }).Result;
}
```

The code above also blocks the caller, but it dispatches the work to the threadpool. Task.Run forces the execution to happen on the threadpool. So if called from a thread other than a threadpool thread, this is actually a pretty okay way to queue work for the threadpool.

- If you have a classic ASP.NET application or a UI application, you can call async functions from a sync function using this method, then update the UI based on the result, with the caveat that this blocks the UI or IIS managed thread until the work is done. In the case of the IIS thread this is not a huge problem, as the request cannot complete until the work is done, but in the case of a UI thread this would make the UI unresponsive.

- If this code is called from a threadpool thread, then once again it will lead to a deadlock if the workload is high enough, because it's blocking a threadpool thread which might be necessary for completing the task. It is best to avoid writing code like this, especially in the context of a library where you have no control over the context your code gets called from.

And now let's look at the final version, which does horrible things…

Deadlock version. Don't write this:

```csharp
public String DownloadStringV5(String url)
{
    // REALLY REALLY BAD CODE,
    // guaranteed deadlock
    return Task.Run(() => {
        var request = HttpClient.GetAsync(url).Result;
        var download = request.Content.ReadAsStringAsync().Result;
        return download;
    }).Result;
}
```

Well, the code above is a bit of an exaggeration, just to prove a point. It's the worst possible thing that you can do. The code above will deadlock no matter what context you are calling from, because it schedules tasks for the threadpool and then it blocks the threadpool thread. If called enough times in parallel, it will exhaust the threadpool, and your application will hang… indefinitely. In which case the best thing you can do is take a memory dump and restart the application.

What Causes a Deadlock?

Task.Wait() does. That would be the end of the story, but sometimes it cannot be avoided, and it's not the only case. A deadlock might also be caused by other sorts of blocking code: waiting for a semaphore, acquiring a lock. The advice in general is simple: don't block in async code. If possible, this is the solution. There are many cases where this is not possible, and that's where most problems come from.

Here is an example from our own codebase.

Yep! This causes a deadlock!

```csharp
public String GetSqlConnString(RubrikkUser user, RubrikkDb db)
{
    // deadlock if called from threadpool,
    // works fine on UI thread, works fine from console main
    return Task.Run(() =>
        GetSqlConnStringAsync(user, db)).Result;
}
```

Look at the code above. Try to understand it. Try to guess the intent, the reason why it's written like this. Try to guess how the code could fail. It doesn't matter who wrote it, anyone could have written this. I wrote code like this, that's how I know it deadlocks.

The problem the programmer is facing is that the API they are supposed to call is async only, but the function they are implementing is sync. The problem can be avoided altogether by making the method async as well. Problem solved.

But it turns out that you need to implement a sync interface, and you are supposed to implement it using an API which has only async functions.

The execution is wrapped inside a Task.Run. This will schedule the task on the threadpool and then block the calling thread. This is okay, as long as the calling thread is not a threadpool thread. If the calling thread is from the threadpool, then the following disaster happens: a new task is queued to the end of the queue, and the threadpool thread which would eventually execute the Task is blocked until the Task is executed.

Okay, so we don't wrap it inside a Task.Run, and we get the following version:

This still causes a deadlock!

```csharp
public String GetSqlConnString(RubrikkUser user, RubrikkDb db)
{
    // deadlock from UI thread, deadlock if called from threadpool,
    // works fine from console main
    return GetSqlConnStringAsync(user, db).Result;
}
```

Well, it got rid of an extra layer of task, which is good, and the task is scheduled for the current context. What does this mean? This means that the code will deadlock if the threadpool is already exhausted, or instantly deadlock if called from the UI thread, so it solves nothing. At the root of the problem is the .Result property.

So at this point you might think, is there a solution for this? The answer is complicated. In library code there is no easy solution, as you cannot assume under what context your code is called. The best solution is to only call async code from async code and blocking sync APIs from sync methods; don't mix them. The application layer on top has knowledge of the context it's running in and can choose the appropriate solution. If called from a UI thread, it can schedule the async task for the threadpool and block the UI thread. If called from the threadpool, then you might need to open additional threads to make sure that there is something left to finish the work. But if you include a transition like this from sync to async code inside a library, then the calling code won't be able to control the execution, and your library will fail with some applications or frameworks.

Library code should be written without any assumption about the synchronization context or the framework it is called from. If you need to support both a blocking sync and an async interface, then you must implement the function twice, once for each version. Don't even think about calling them from each other for code reuse. You have two options: either make your function blocking sync and use blocking sync APIs to implement it, or make your function async and use async APIs to implement it. In case you need both, you can and should implement both separately. I recommend ditching blocking sync entirely and just using async.
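As a rough illustration of "implement it twice", here is a sketch of a tiny library type with a hypothetical name (ConfigReader) that is not from our codebase. The sync method is built only from blocking APIs and the async method only from async APIs, so neither has to call .Result or Task.Run.

```csharp
using System.IO;
using System.Threading.Tasks;

public static class ConfigReader
{
    // Blocking version: uses only blocking APIs.
    public static string ReadConfig(string path)
    {
        return File.ReadAllText(path).Trim();
    }

    // Async version: uses only async APIs, no .Result and no .Wait().
    public static async Task<string> ReadConfigAsync(string path)
    {
        string text = await File.ReadAllTextAsync(path);
        return text.Trim();
    }
}
```

The Trim() call is duplicated on purpose. With real code the duplication is bigger, but the caller gets to pick the version that fits its own context.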

Other solutions include writing your own TaskScheduler or SynchronizationContext, so that you have control over the execution of tasks. There are plenty of articles on this. If you have free time, give it a try; it's a good exercise and you'll gain deeper insight than any article can provide.
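If you want a starting point for that exercise, below is a minimal sketch of a custom SynchronizationContext. Everything in it (SingleThreadContext, Run, PumpDemo) is a made-up name for illustration, and it is not production code: it runs an async function on the calling thread by pumping the posted continuations itself instead of blocking on .Result, and it assumes nothing posts to the context after the function has finished.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: every callback posted to this context runs on the
// one thread that called Run, similar in spirit to a UI thread.
public sealed class SingleThreadContext : SynchronizationContext
{
    private readonly BlockingCollection<(SendOrPostCallback Callback, object? State)> _queue =
        new BlockingCollection<(SendOrPostCallback Callback, object? State)>();

    // Continuations captured by await arrive here and are queued.
    public override void Post(SendOrPostCallback d, object? state) => _queue.Add((d, state));

    // Runs an async function to completion on the calling thread.
    public static T Run<T>(Func<Task<T>> asyncFunc)
    {
        SynchronizationContext? previous = Current;
        var context = new SingleThreadContext();
        SetSynchronizationContext(context);
        try
        {
            Task<T> task = asyncFunc();
            // Stop the pump once the async function has finished.
            task.ContinueWith(_ => context._queue.CompleteAdding(), TaskScheduler.Default);
            // Execute queued continuations on this thread until the pump is stopped.
            foreach (var (callback, state) in context._queue.GetConsumingEnumerable())
            {
                callback(state);
            }
            return task.GetAwaiter().GetResult(); // the task is already complete here
        }
        finally
        {
            SetSynchronizationContext(previous);
        }
    }
}

public static class PumpDemo
{
    // Reports whether the code after the await ran on the same thread as the code before it.
    public static bool ResumesOnSameThread()
    {
        return SingleThreadContext.Run(async () =>
        {
            int before = Environment.CurrentManagedThreadId;
            await Task.Delay(10);
            return before == Environment.CurrentManagedThreadId;
        });
    }

    public static void Main() => Console.WriteLine(ResumesOnSameThread());
}
```

The interesting part is the loop in Run: the thread that would otherwise be blocked keeps executing the queued continuations, which is why the async function is able to finish.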

SynchronizationContext? TaskScheduler?

These control how your tasks are executed. They determine what you can and cannot do when calling async functions. All that async functions do is schedule a Task for the current context. The TaskScheduler may schedule the execution in any way it pleases. You can implement your own TaskScheduler and do whatever you want with it. You can implement your own SynchronizationContext too and schedule from there.

The SynchronizationContext is a generic way of queuing work for other threads. The TaskScheduler is an abstraction over this which handles the scheduling and execution of Tasks.

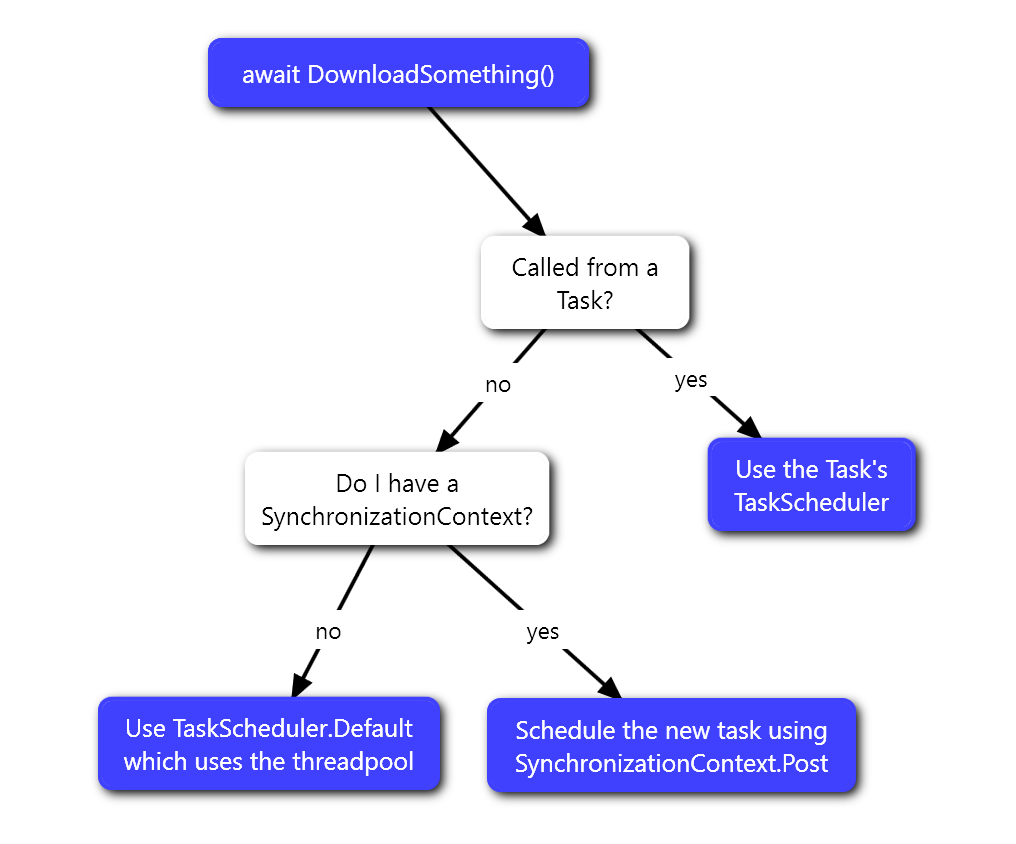

When you create a task, by default C# will use TaskScheduler.Current to enqueue the new task. This will use the TaskScheduler of the current task. If there is no such thing, it checks whether there is a synchronization context associated with the current thread and uses that to schedule execution of tasks using SynchronizationContext.Post. If there is no such thing either, it will use TaskScheduler.Default, which schedules work in a queue that gets executed using the thread pool.

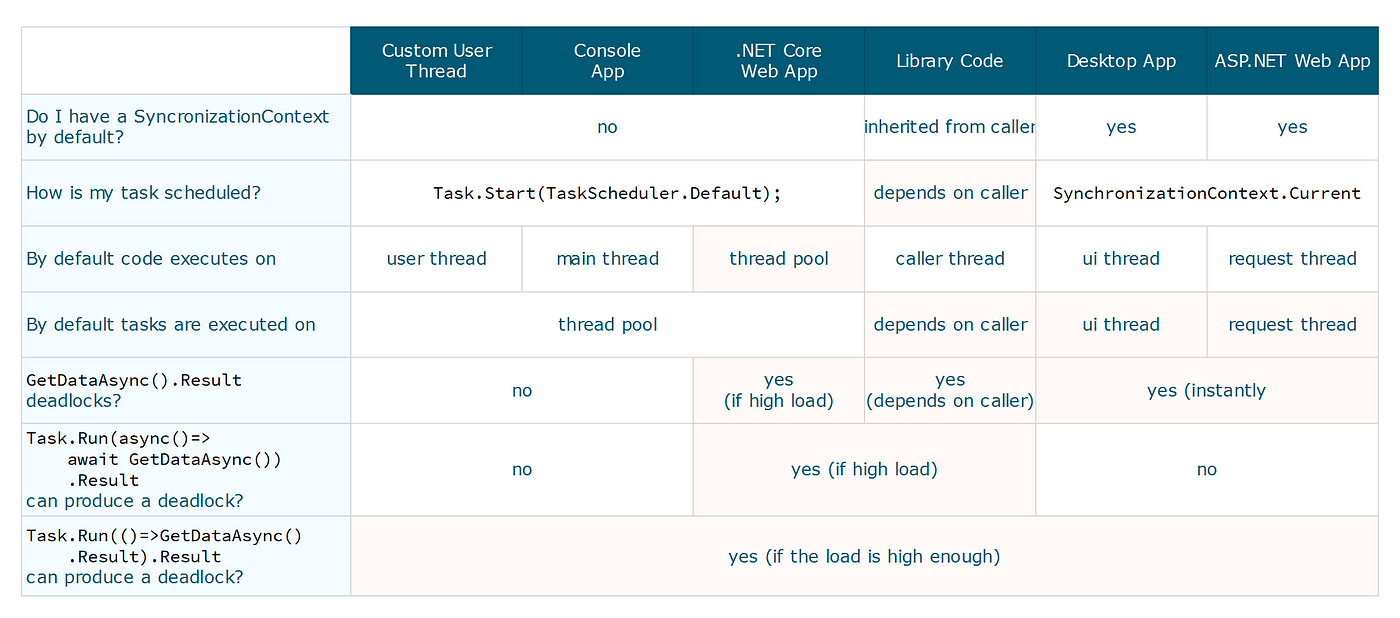
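If you are not sure which situation your code is in, you can simply ask the current thread. The helper below is a hypothetical sketch (ContextInspector and Describe are made-up names); it only reads SynchronizationContext.Current, TaskScheduler.Current and Thread.IsThreadPoolThread and formats them.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ContextInspector
{
    // Describes where the calling thread would schedule continuations.
    public static string Describe()
    {
        SynchronizationContext? context = SynchronizationContext.Current;
        bool defaultScheduler = TaskScheduler.Current == TaskScheduler.Default;
        bool poolThread = Thread.CurrentThread.IsThreadPoolThread;
        return $"syncContext={(context?.GetType().Name ?? "none")}, " +
               $"defaultScheduler={defaultScheduler}, threadPoolThread={poolThread}";
    }

    public static async Task Main()
    {
        Console.WriteLine("main thread: " + Describe());
        Console.WriteLine("Task.Run:    " + await Task.Run(() => Describe()));
    }
}
```

Calling Describe() from a button click handler, a request handler, and a console Main is a quick way to compare the contexts your own application actually runs in.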

Those are a lot of complicated things to consider at the same time, so let's break it down into several common cases:

- In console applications, by default you don't have a synchronization context, but you have a main thread. Tasks will be queued using the default TaskScheduler and will be executed on the thread pool. You can freely block your main thread; it will simply stop executing.

- If you create a custom thread, by default you don't have a synchronization context; it's just like having a console application. Tasks get executed on the thread pool and you can block your custom thread.

- If you are in a thread pool thread, then all following tasks are also executed on the thread pool, but if you have blocking code here then the threadpool will run out of threads, and you will deadlock.

- If you are in a desktop UI thread, you have a synchronization context, and by default tasks are queued for execution on the UI thread. Queued tasks are executed one by one. If you block the UI thread, there is nothing left to execute tasks and you have a deadlock.

- If you're writing a dotnet core web application, you're basically running everything on the thread pool. Any blocking code will block the thread pool and any .Result will lead to a deadlock.

- If you're writing an ASP.NET web application, then you have threads managed by IIS, which will allocate one for each request. Each of these threads has its own synchronization context. Tasks get scheduled for these threads by default. You need to manually schedule for the threadpool for parallel execution. If you call .Result on a task which is enqueued for the request thread, you will instantly deadlock.

- If you're writing a library, you have no idea what code is calling your code, and mixing async code with sync code, or calling .Result, will almost certainly make an application deadlock. Never mix async and sync code in a library.

How to Write Good Async Code?

Until now we talked about good cases, bad cases and cases that work only in some contexts. But what about some practices to follow? It depends. It's not easy to enforce common practices, because how async/await works depends on the context. But these should be followed in library code.

- Only call async code from async code. (don't mix sync with async)

- Never block in async code. (never .Result, never lock)

- If y'all need a lock, use SemaphoreSlim.WaitAsync()

- Use async/await when dealing with Tasks, instead of ContinueWith/Unwrap; it makes the code cleaner.

- It's okay to provide both sync and async versions of an API, but never call one from the other. (this is one of the rare cases when code duplication is acceptable)
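For the locking rule in the list above, here is a small sketch of SemaphoreSlim used as an async-friendly lock. The Counter class is hypothetical, and Task.Yield() only stands in for whatever real async work you would do while holding the lock.

```csharp
using System.Threading;
using System.Threading.Tasks;

public sealed class Counter
{
    // A semaphore with a single slot behaves like a lock that can be awaited.
    private readonly SemaphoreSlim _gate = new SemaphoreSlim(1, 1);
    private int _value;

    // Increments the counter under the async lock. While waiting for the
    // semaphore the method is suspended; no thread is blocked.
    public async Task<int> IncrementAsync()
    {
        await _gate.WaitAsync();
        try
        {
            int current = _value;
            await Task.Yield();   // stand-in for real async work inside the lock
            _value = current + 1;
            return _value;
        }
        finally
        {
            _gate.Release();      // always release, even if the work throws
        }
    }
}
```

A regular lock statement would not even compile here, because C# does not allow await inside a lock block.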

Understanding all the concepts that relate to async can take some time. Until you do that, here is a cheat sheet that tells you what you can and cannot do in each context. This is not a comprehensive list, and the deadlock categorization leans towards the strict side, which means that something might still work in some cases but will deadlock in production. There can be other types of blocking code, like Thread.Sleep or Semaphore.WaitOne. These will not cause a deadlock on their own, but will increase the risk of deadlocking if there is a .Result somewhere.

Debugging Methodology

You have a deadlock in your code? Great! The important part is to identify it. It can come from any Task.Result or Task.Wait or possibly other blocking code. It's like searching for a needle in a haystack.

Memory Dumps Help a Lot!

If you find your application in a deadlocked state, take a memory dump of your application. Azure has tools for this on the portal; if not, there are plenty of guides for this. This will capture the state of your application. DebugDiag 2 Analysis can automatically analyze the memory dump. You need to look at the stack traces of the threads to see where the code is blocked. Upon code review you will find a statement there which blocks the current thread. You need to remove the blocking statement to fix the deadlock.

Reproducing the Deadlock

The other approach is to reproduce the deadlock. The method you can use here is stress testing: launch many threads in parallel and see if the application survives. However, this might not be able to reproduce the issue, especially if the async tasks complete fast enough. A better approach is to limit the concurrency of the thread pool to one when the application starts. This means that if you have any bad async code where a threadpool thread would block, then it definitely will block. This second approach of limiting concurrency is also better for performance while debugging: Visual Studio is really slow if there are a lot of threads or tasks in your application.
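Limiting the thread pool at startup could look roughly like the sketch below. The helper name (ThreadPoolLimiter.TryLimit) is made up; ThreadPool.SetMinThreads and ThreadPool.SetMaxThreads are the real APIs. Keep in mind that, according to its documentation, SetMaxThreads rejects a maximum below the number of processors, so a limit of exactly one may only be reachable on a single-core machine or container. That is why the sketch reports whether the runtime accepted the value.

```csharp
using System;
using System.Threading;

public static class ThreadPoolLimiter
{
    // Tries to cap the thread pool at `workers` worker threads and returns
    // false when the runtime rejects the requested value.
    public static bool TryLimit(int workers)
    {
        ThreadPool.GetMinThreads(out _, out int minIo);
        ThreadPool.GetMaxThreads(out _, out int maxIo);
        // Lower the minimum first so that it does not exceed the new maximum.
        bool minOk = ThreadPool.SetMinThreads(workers, minIo);
        bool maxOk = ThreadPool.SetMaxThreads(workers, maxIo);
        return minOk && maxOk;
    }

    public static void Main()
    {
        // Ask for one worker first, then fall back to the processor count.
        bool limited = TryLimit(1) || TryLimit(Environment.ProcessorCount);
        ThreadPool.GetMaxThreads(out int workers, out _);
        Console.WriteLine($"limited={limited}, max worker threads={workers}");
    }
}
```

Call it as the very first thing in your test host or Main, before any async work has started, so that the limit is already in place when the first blocking call happens.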

Some Corrections:

As has been pointed out, my examples don't work the way they are supposed to. This is because I have simplified them too much in order to get my ideas across more easily. (I hope it worked!)

When you call an async method which is simple enough, it might work even if it's used wrongly. Also, in general the classic .NET Framework is more forgiving due to it having dedicated threads that you can block. You will experience this when porting from the forgiving .NET Framework to the harsher .NET Core if the original project has badly written async code that "worked at the time".

Some of the examples rely on having a high load. This is something that's hard to test and usually happens when it's too late: in production.

In one of the comments I've added a pull request to properly reproduce some of the problems in the examples by creating an async method which is a bit more complex than the one in my example:

https://bitbucket.org/postnik0707/async_await/pull-requests/1

See the comments, read the related articles, and test for yourself anything that you don't believe. If possible, simulate high load and limit the thread count in the thread pool to reproduce my results more easily.

Source: https://medium.com/rubrikkgroup/understanding-async-avoiding-deadlocks-e41f8f2c6f5d
